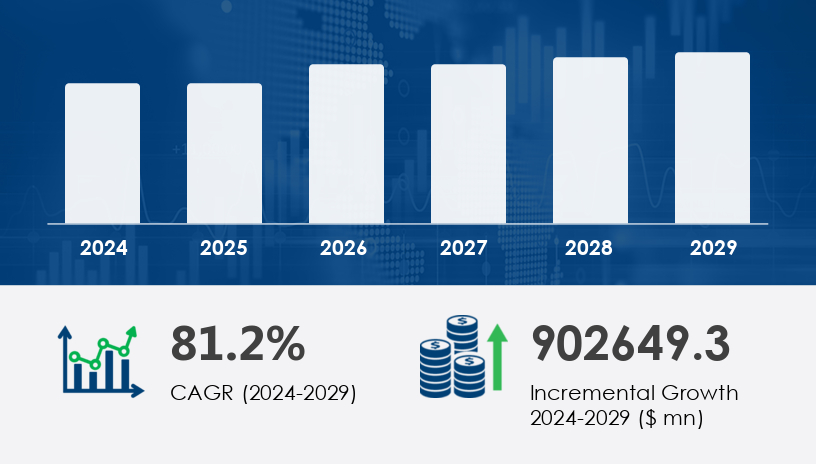

The Artificial Intelligence (AI) Chips Market is projected to grow by USD 902.65 billion between 2024 and 2029, at a compound annual growth rate (CAGR) of 81.2%. Growth at this pace reflects the expanding role of AI chips in industries ranging from healthcare to automotive. The market, valued in the tens of billions of US dollars in 2024, is set to scale dramatically as demand for advanced, energy-efficient AI solutions continues to rise.
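
As a sanity check on these figures, the short sketch below backs out the starting market size implied by the USD 902.65 billion increase and the 81.2% CAGR. It assumes the increase is measured from a 2024 base and compounds over five annual periods; that assumption and the helper name `implied_start_value` are illustrative and do not come from the report. Under this assumption, the implied 2024 base is roughly USD 48.7 billion, consistent with the "tens of billions" figure.

```python
"""Back out the market size implied by a growth increment and a CAGR.

Assumption (not stated in the report): the USD 902.65 billion increase is
measured from a 2024 base and compounds over five annual periods to 2029.
"""


def implied_start_value(increment: float, cagr: float, periods: int) -> float:
    """Return V0 such that V0 * (1 + cagr) ** periods - V0 == increment."""
    growth_multiple = (1 + cagr) ** periods
    return increment / (growth_multiple - 1)


INCREMENT_USD_BN = 902.65  # projected increase, 2024 to 2029 (from the report)
CAGR = 0.812               # 81.2% CAGR (from the report)
PERIODS = 5                # assumption: five compounding years

start = implied_start_value(INCREMENT_USD_BN, CAGR, PERIODS)
end = start + INCREMENT_USD_BN
print(f"Implied 2024 size: about USD {start:.1f} billion")  # about USD 48.7 billion
print(f"Implied 2029 size: about USD {end:.1f} billion")    # about USD 951.4 billion
```

Because 1.812 raised to the fifth power is about 19.5, an 81.2% CAGR multiplies the market roughly twentyfold over the period, which is why a base in the tens of billions can produce an increase of nearly a trillion dollars.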

A primary force behind the market's accelerated growth is the increased focus on developing AI chips for smartphones and data centers. Neural networks need substantial processing power to work through massive datasets, and general-purpose CPUs are poorly suited to the highly parallel arithmetic involved. Data centers have therefore integrated specialized AI chips such as GPUs, TPUs, and ASICs to optimize infrastructure, energy management, and server efficiency. By lowering power consumption and improving uptime, AI chips both enhance performance and reduce operational costs. According to analysts, this shift is enabling businesses to meet escalating AI demand while preserving resource efficiency.
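
To make the compute argument concrete, the sketch below counts multiply-accumulate (MAC) operations for a stack of fully connected neural network layers. The layer widths and batch size are hypothetical values chosen for illustration, not figures from the report, and the helper names are likewise illustrative.

```python
"""Rough MAC count for a stack of fully connected layers.

Hypothetical sketch: the layer widths and batch size below are illustrative
assumptions, not figures from the report.
"""


def dense_layer_macs(batch_size: int, in_features: int, out_features: int) -> int:
    """MACs for one fully connected layer computing y = x @ W + b.

    Each of the batch_size * out_features outputs is a dot product of length
    in_features. Those outputs do not depend on one another, so hardware with
    many parallel arithmetic units can compute them at the same time.
    """
    return batch_size * in_features * out_features


def forward_macs(batch_size: int, layer_widths: list[int]) -> int:
    """Total MACs for one forward pass through consecutive dense layers."""
    return sum(
        dense_layer_macs(batch_size, d_in, d_out)
        for d_in, d_out in zip(layer_widths, layer_widths[1:])
    )


if __name__ == "__main__":
    widths = [4096, 4096, 4096, 4096]  # hypothetical: three 4096x4096 layers
    total = forward_macs(batch_size=64, layer_widths=widths)
    print(f"{total:,} MACs per forward pass")  # 3,221,225,472 MACs per forward pass
```

Even this small, hypothetical network needs more than three billion MACs for one batch of 64 inputs. Because the outputs within each layer are independent, chips with thousands of parallel units (GPUs, TPUs, ASICs) can process them far faster than a CPU with a handful of cores.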

One of the most transformative trends is the convergence of AI and Internet of Things (IoT) technologies. AI chips are increasingly embedded in IoT devices, from smart TVs and drones to medical wearables, enabling on-device machine learning and real-time decision-making. The integration of Human-Machine Interfaces (HMIs) into these devices is further driving AI chip deployment, with the chips improving device performance and energy efficiency. As AI applications grow in complexity, this convergence is producing power-efficient, intelligent systems that are shaping the next generation of industrial automation, healthcare diagnostics, and consumer electronics.

The market's expansion is fueled by increasing demand for high-performance computing in AI applications. Core technologies include neural processors, AI chipsets, and graphics processing units (GPUs), which remain essential for deep learning and machine learning workloads. Innovations in application-specific integrated circuits (ASICs) and field-programmable gate arrays (FPGAs) are enabling specialized performance, while AI accelerators that pair high-bandwidth memory with system-on-chip (SoC) architectures are improving parallel processing efficiency. Architectures built around tensor cores support AI inference for tasks such as computer vision and natural language processing. The integration of quantum computing is also being explored for future scalability. Applications ranging from generative AI to edge and cloud computing depend on chips optimized for AI training and rapid data processing, including neural network frameworks and predictive analytics workloads.

By Product

ASICs

GPUs

CPUs

FPGAs

By End-user

Media and advertising

BFSI

IT and telecommunications

Others

By Processing Type

Edge

Cloud

By Application

Natural language processing (NLP)

Robotics

Computer vision

Network security

Others

By Technology

System on Chip (SoC)

System in Package (SiP)

Multi-Chip Module (MCM)

Others

By Function

Training

Inference

Among all product categories, Application-Specific Integrated Circuits (ASICs) are the fastest-growing segment through 2029. ASICs are custom-designed for specific AI workloads and, on those workloads, typically offer better performance and energy efficiency than more general-purpose GPUs, CPUs, and FPGAs. The ASICs segment was valued at USD 4.73 billion in 2019 and has grown steadily since, driven by adoption in deep learning, robotics, and generative AI applications. Analysts note that ASICs are critical for real-time AI data processing, particularly in AI data centers and edge computing environments. Their optimized architectures support parallel execution of AI algorithms and local processing, positioning them as foundational to AI infrastructure.

Covered Regions

North America

Europe

APAC

South America

Middle East and Africa

Rest of World (ROW)

North America leads the global Artificial Intelligence (AI) Chips Market and is estimated to contribute 42% of the market's growth during the 2025 to 2029 forecast period. The region's dominance stems from its high concentration of AI chip companies, such as NVIDIA, AMD, and Intel, and from robust investment in AI data centers. Companies are deploying AI for applications ranging from autonomous vehicles to financial fraud detection, fueling demand for high-performance chips. Analysts highlight that ongoing developments, such as AWS's Trainium2 chip for generative AI, are reinforcing North America’s leadership in AI innovation. With mature cloud infrastructure and regulatory support, the region is expected to maintain its market advantage throughout the forecast period.
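
Assuming the 42% share applies to the USD 902.65 billion total increase projected for 2024 to 2029 (an interpretation, since the report does not state the regional figure in dollars), North America's estimated contribution works out to roughly USD 379 billion:

$$0.42 \times 902.65 = 379.113 \approx 379 \quad \text{(USD billion)}$$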

Despite rapid growth, a significant obstacle for the Artificial Intelligence (AI) Chips Market is the shortage of technically skilled professionals. Developing high-performance AI chips requires expertise in hardware engineering, machine learning, and advanced computing architectures—skills that are currently in short supply. This talent gap is hampering R&D efforts and delaying time-to-market for new chip innovations. Furthermore, high development costs exacerbate the challenge, making it difficult for companies to scale their AI initiatives. According to analysts, organizations must invest in education partnerships and internal training programs to bridge this gap and fully capitalize on the market opportunity.

Recent market research highlights a shift toward chips optimized for real-time analytics, higher energy efficiency, and faster, more accurate execution of AI algorithms. Support for techniques such as data compression, and for workloads such as image recognition, speech recognition, and anomaly detection, has become a key point of competition. The rising complexity of AI workloads has increased investment in hardware acceleration, including multi-chip modules and high-performance digital signal processors (DSPs). Scalable deployment of AI models now relies on efficient data pipelines, feature extraction, and text analytics capabilities. Voice synthesis applications and multi-modal functions enabled by sensor fusion are broadening demand for adaptive chip designs. Infrastructure components such as AI servers, higher memory bandwidth, and greater compute power are also essential to meet the demands of modern AI systems. Security features such as data encryption are becoming standard in chip architectures to support compliant, secure processing environments.

Research in the AI chips sector is heavily focused on architectural innovations, energy efficiency, and hybrid computing capabilities that can handle next-gen workloads. As AI applications diversify across industries—from autonomous vehicles to personalized medicine—chipmakers are racing to develop more specialized, scalable, and secure silicon solutions. The convergence of software and hardware through co-designed systems is expected to redefine performance benchmarks, with strong momentum toward edge-native AI processing and sustainable compute design.

Innovation and strategic partnerships are at the core of competition in the AI chips market. Recent developments include:

In January 2025, NVIDIA launched the Blackwell NVL72 AI supercomputer, enhancing inference processing efficiency by 30% for data centers.

In February 2025, AMD acquired photonic IC startup Enosemi, aiming to improve data transfer speeds by 25% through co-packaged optics.

In March 2025, Intel collaborated with AWS to produce a custom AI fabric chip on Intel's 18A process, targeting a 20% boost in cloud AI production by 2026.

In April 2025, NXP expanded its i.MX 95 AI processor line to Europe and APAC, focusing on automotive and industrial AI markets, with projections to capture 15% of the edge AI market by 2027.

Companies are also diversifying their AI portfolios with new chips tailored for natural language processing, robotics, and autonomous systems. These strategic moves underline a shared industry objective: to deliver faster, energy-efficient AI chips capable of powering next-generation AI applications across sectors.

1. Executive Summary

2. Market Landscape

3. Market Sizing

4. Historic Market Size

5. Five Forces Analysis

6. Market Segmentation

6.1 Product

6.1.1 ASICs

6.1.2 GPUs

6.1.3 CPUs

6.1.4 FPGAs

6.2 End-User

6.2.1 Media and advertising

6.2.2 BFSI

6.2.3 IT and telecommunications

6.2.4 Others

6.3 Processing Type

6.3.1 Edge

6.3.2 Cloud

6.4 Application

6.4.1 NLP

6.4.2 Robotics

6.4.3 Computer vision

6.4.4 Network security

6.4.5 Others

6.5 Technology

6.5.1 SoC

6.5.2 SiP

6.5.3 MCM

6.5.4 Others

6.6 Function

6.6.1 Training

6.6.2 Inference

6.7 Geography

6.7.1 North America

6.7.2 APAC

6.7.3 Europe

6.7.4 South America

6.7.5 Middle East and Africa

7. Customer Landscape

8. Geographic Landscape

9. Drivers, Challenges, and Trends

10. Company Landscape

11. Company Analysis

12. Appendix